PicToPlate: From Ingredients to Recipes with Retrieval-Augmented Generation

UC San Diego Data Science Capstone Project

Try out the product here!

Read our report here!

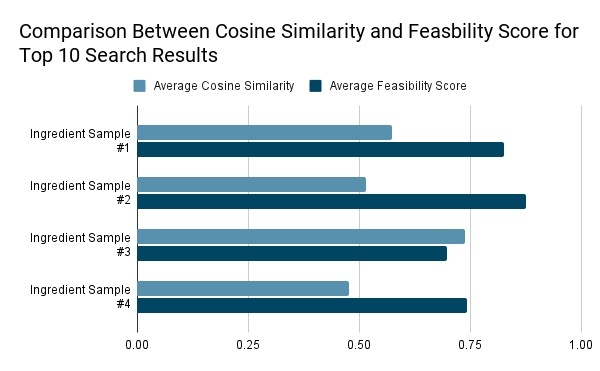

Deciding what to cook and how to cook it, especially when trying to make the most of the ingredients on hand, can be confusing and time-consuming. Our product will address this everyday need by using object detection and large language models to simplify the cooking experience for home cooks. Users will upload an image of their groceries; GPT-4 Vision will produce a list of the available ingredients, and the product will then search a database of recipes that use those ingredients. An AI chatbot will guide users through each step of the process, for example by letting them enter additional ingredients and by walking them through the steps of the chosen recipe. This approach combines computer vision, search, and large language models to improve the user experience in the kitchen.
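To make the "search a database of recipes" step concrete, here is a minimal Python sketch of one way it could work. It is an illustration under stated assumptions, not the project's actual retrieval method: the `Recipe` type, the `rank_recipes` function, the tiny in-memory `RECIPES` list, and the coverage score (the fraction of a recipe's ingredients the user already has) are all hypothetical. The ingredient list is hard-coded in place of a real GPT-4 Vision response.

```python
from __future__ import annotations

from dataclasses import dataclass


@dataclass(frozen=True)
class Recipe:
    """Hypothetical recipe record; the real database schema is not described."""
    name: str
    ingredients: frozenset[str]


def normalize(item: str) -> str:
    """Lower-case and trim an ingredient name so 'Egg ' matches 'egg'."""
    return item.strip().lower()


def rank_recipes(available, recipes, top_k=3):
    """Rank recipes by the fraction of their ingredients that are available.

    This coverage score is an assumed stand-in for the retrieval step.
    Returns up to top_k (name, score) pairs, best match first.
    """
    have = {normalize(i) for i in available}
    scored = []
    for recipe in recipes:
        needed = {normalize(i) for i in recipe.ingredients}
        if not needed:
            continue  # skip malformed recipes with no ingredients
        coverage = len(needed & have) / len(needed)
        if coverage > 0:
            scored.append((coverage, recipe.name))
    # Highest coverage first; ties broken alphabetically by name.
    scored.sort(key=lambda pair: (-pair[0], pair[1]))
    return [(name, round(cov, 2)) for cov, name in scored[:top_k]]


# Toy data standing in for the recipe database (assumption).
RECIPES = [
    Recipe("Tomato omelette", frozenset({"egg", "tomato", "butter"})),
    Recipe("Caprese salad", frozenset({"tomato", "mozzarella", "basil"})),
    Recipe("Pancakes", frozenset({"flour", "egg", "milk", "butter"})),
]

# Stand-in for the ingredient list extracted from the grocery image.
detected = ["Egg", "tomato ", "butter", "milk"]

if __name__ == "__main__":
    print(rank_recipes(detected, RECIPES))
```

In a retrieval-augmented setup, the top-ranked recipes would then be passed to the chatbot as context, so that its step-by-step guidance is grounded in a recipe from the database rather than generated from scratch.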